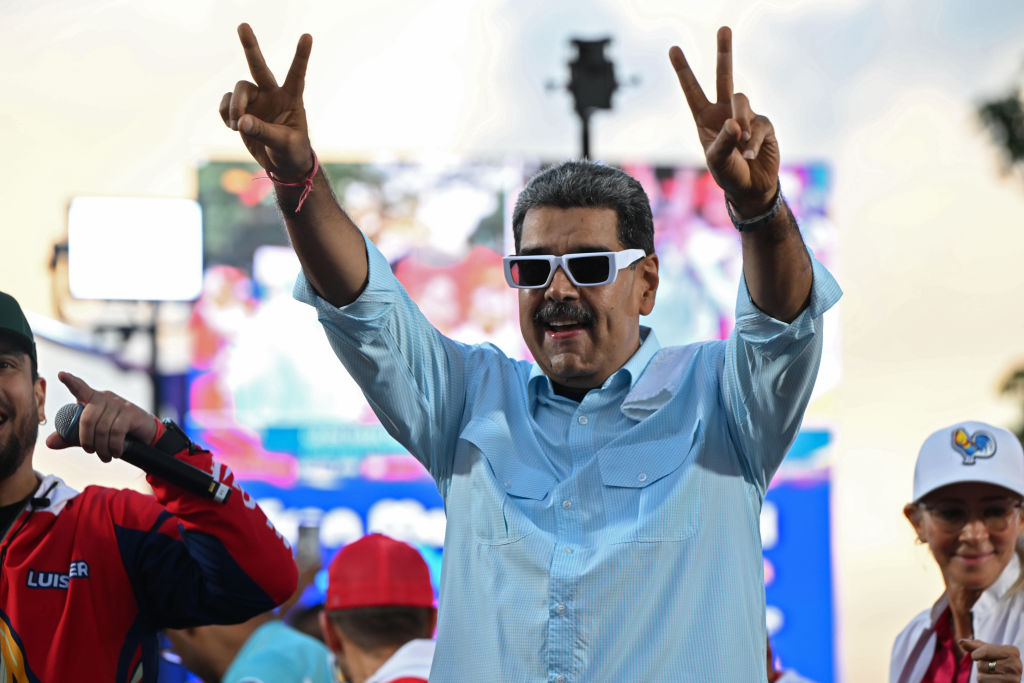

The U.S. military used Anthropic’s AI model Claude during a classified operation that ended with Nicolás Maduro in U.S. custody, according to The Wall Street Journal. It is an unusually stark example of a commercial AI chatbot being drawn into a real-world military mission against a hostile regime.

The report says Claude was accessed through Palantir systems, which are already embedded across U.S. defense and federal platforms, and helped support analysis and decision-making for the raid. Anthropic’s own usage policies publicly prohibit uses involving violence, weapons, or surveillance, setting up a direct clash between Silicon Valley guardrails and battlefield reality.

Neither the Pentagon nor Anthropic confirmed operational specifics; Reuters notes it could not independently verify the WSJ account, and key parties declined comment. Still, the episode is reverberating inside Washington because it highlights how AI tools can be pulled into classified environments via contractors even when model providers claim strict limitations.

Venezuela’s government, meanwhile, has framed the incident as an act of U.S. aggression and, in its own statements, cited casualties from strikes in Caracas, while outside reporting stresses that the details remain contested and partially classified. Either way, major AI models are no longer just “office automation”; they are being operationalized in high-stakes national-security missions.
